Generative AI is far easier to adopt than earlier technologies. What used to require training, further education and learning curves of varying steepness is now available at the touch of a button. ChatGPT and other freely accessible, easily adopted tools lower the entry threshold to practically zero: anyone can use them, no one has to learn the basics first, and communication takes place in natural language.

This gives many companies an important opportunity to get started with GenAI: to use AI for data analyses of all kinds, to improve business processes and to gain initial experience with a technology that has grown explosively in just a few months.

But beware: this easy start can obscure how complex the productive, company-wide and legally compliant use of AI really is, and how much cultural change companies need before employees can utilise the opportunities offered by generative AI.

Companies need an AI infrastructure: an IT landscape that can collect, aggregate and process data in such a way that GenAI can extract valid information from it. They need specialists who can go beyond everyday language to get an AI to produce useful output that actually supports the work in the company. And they need governance that regulates the compliant, safe and productive use of AI in the company and, yes, sets limits where that use threatens to violate ethical or legal principles.

Because access is so low-threshold, the introduction of AI can generally be accelerated from the bottom up: employees gain experience and create pressure for its adoption. Nevertheless, the company-wide introduction of GenAI is a matter for top management, which must want and promote it.

Even at this stage, and even more so afterwards, it is up to the CIO to create the technical and procedural framework for AI and to monitor compliance with it.

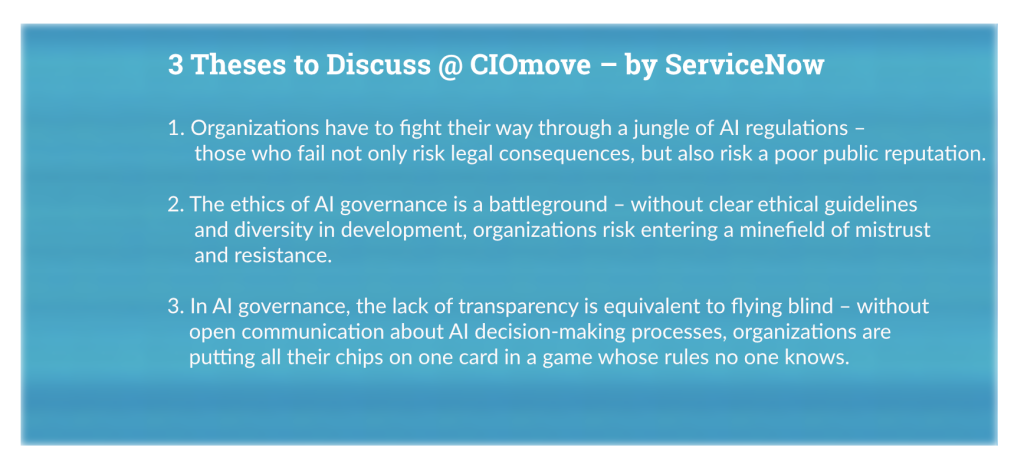

The effective governance of artificial intelligence is business-critical, precisely because the introduction of AI raises complex ethical, legal and social questions. Organisations therefore need AI governance frameworks that ensure the responsible and ethical use of AI, give employees the freedom they need to work with it and at the same time mitigate the risks associated with bias, data breaches and unintended consequences.

All of this requires a comprehensive approach to AI governance that includes regulatory compliance, ethical considerations and stakeholder engagement.

What’s more, traditional governance approaches do not work here, or no longer work well enough, because the basis for the productive use of AI has changed to such an extent that new rules, new best practices and new ethical principles are required. CIOs have a role to play in all of this.